Finding the Children in Abuse Media - Using Automated Age Estimation to Triage Investigative Media

Mission Area

Law Enforcement

Please note: This case study contains discussion of indecent images of children, and of child abuse. If you would prefer not to be exposed to this, please consider returning to the homepage.

Problem

- Child abuse material has become an industry, with advertising, organised groups and series similar to those found in lawful media production, but behind every video is a victim who is totally powerless, and a contact abuser deserving of prosecution.

- The task of identifying each victim is manual, falling upon specially trained officers who are subjected to thousands of hours of material as they attempt to determine whether it contains indecent images of a minor, and to seek leads that can help rescue that victim.

- A core component of establishing the illegality of the media is reaching an accurate estimate of the ages of those present; the process is professional, but no less heartbreaking for it.

- Each year, millions of images and hundreds of thousands of hours of abuse material are intercepted and seized by UK law enforcement, and the rate of production is increasing.

Solution

We have been working with expert officers at a UK agency (due to the sensitivities involved in their work they have asked to remain anonymous) to explore how our technologies can help their officers identify victims at risk, and ensure the right jurisdiction is notified of their peril. One particular challenge for this use case is the balancing of error rates: our partners are keen not to miss any potential children, while reducing the amount of time that their officers have to spend poring over this heinous material. The uncomfortable truth is that many thousands of hours of material go unreviewed every week as the backlog of media awaiting human review grows, and some of that material will doubtless contain clearly identifiable victims.

Our solution was to create a system with a solid basis in the neuroscience of perception, and in the age bands associated with the sentencing guidelines in the UK, which could communicate to officers not just an estimated age, but a confidence in membership of a protected age band. We agreed with our end-users that the ability to prioritise each video almost instantly, and to be 99.9% certain that the highlighted media contain children, would vastly increase officers' ability to triage those media.

We developed an AI technology to automatically detect faces within media and estimate their age, in a similar way to how facial recognition technology operates. This technology is as accurate as those expert officers, and can automatically extract all of the instances of each face within a video, aggregate the different 3D views of a face together, and work out a probability distribution of the subject's age in years. We can then process this distribution to say how likely it is that a subject is within a particular age band, with very high precision: every single capture that was determined to be a child was correct (this is from a single video frame; aggregation of these judgements across frames improves the certainty). Our technology missed only around 15% of children in our Challenge Dataset. These were the most ambiguous cases, which require a human rater to judge, and they can be surfaced as having lower certainty in our analysis.
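The step from an age distribution to a band-membership confidence can be illustrated with a minimal sketch. This is not the production system: the function names, the simple averaging used to combine frames, and the example band of 0 to 17 years are all assumptions made for illustration. Only the 99.9% certainty target comes from the requirements agreed with end-users.

```python
from typing import List, Sequence

# Certainty target agreed with end-users (99.9%).
CERTAINTY_THRESHOLD = 0.999


def band_probability(age_pmf: Sequence[float], lo: int, hi: int) -> float:
    """Return P(lo <= age <= hi), where age_pmf[a] is P(age == a years).

    The distribution is renormalised so the input need not sum to 1.
    """
    total = sum(age_pmf)
    if total <= 0:
        raise ValueError("age_pmf must have positive total mass")
    if lo < 0 or lo > hi:
        raise ValueError("band must satisfy 0 <= lo <= hi")
    return sum(age_pmf[lo:hi + 1]) / total


def aggregate_frames(frame_pmfs: Sequence[Sequence[float]]) -> List[float]:
    """Combine per-frame distributions for one face by simple averaging.

    Assumption: the real aggregation method is not described in the text;
    a plain mean is used here only to show the shape of the computation.
    """
    if not frame_pmfs:
        raise ValueError("need at least one frame")
    n = len(frame_pmfs)
    length = len(frame_pmfs[0])
    if any(len(p) != length for p in frame_pmfs):
        raise ValueError("all frames must cover the same age range")
    return [sum(p[a] for p in frame_pmfs) / n for a in range(length)]


def is_high_confidence(age_pmf: Sequence[float], lo: int, hi: int) -> bool:
    """True when band membership meets the certainty threshold."""
    return band_probability(age_pmf, lo, hi) >= CERTAINTY_THRESHOLD
```

A subject whose aggregated distribution falls below the threshold is not discarded in this sketch; it would simply be reported with its lower confidence so that a human rater can make the judgement.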

This technology is much more accurate at determining the exact age of children than that of adults, due to the morphological differences in children's faces as they grow up - an accidental strength of the technology for this particular use case.

Mission Impact

We matched thousands of manual estimates from expert Victim ID Officers against those of the AI on illegal images and videos of children, and were able to show performance comparable to that which we achieved in the lab: 100% accuracy for those we declared children, and only a 3% difference in detection rate. What is more, the system can do this in a matter of seconds per video, and can scale out across tens, hundreds, or even thousands of processing nodes to cope with the ever-increasing rate of production of child sexual abuse material (CSAM). The system we built for this customer also records the timestamp in the video where observations were made, and how many faces were in the frame, so that officers don't need to watch the full video to confirm the AI's judgements.
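The record-keeping described above might look something like the following sketch. The `Observation` fields and the `build_review_queue` function are hypothetical names introduced for illustration, and ranking videos by their single highest confidence is an assumed policy, not a description of the deployed system.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Observation:
    """One face observation in one frame (hypothetical schema)."""
    video_id: str
    timestamp_s: float      # position in the video, in seconds
    faces_in_frame: int     # how many faces were visible in that frame
    p_child: float          # confidence of membership in the protected band


def build_review_queue(
    observations: Iterable[Observation],
    threshold: float = 0.999,
) -> List[Tuple[str, float, List[float]]]:
    """Rank videos by their highest confidence, most confident first.

    Each entry is (video_id, top_confidence, timestamps), where timestamps
    lists, in playback order, the moments that met the threshold, so a
    reviewer can jump to them rather than watch the whole video.
    """
    by_video: Dict[str, List[Observation]] = defaultdict(list)
    for obs in observations:
        by_video[obs.video_id].append(obs)

    queue = []
    for video_id, obs_list in by_video.items():
        top = max(o.p_child for o in obs_list)
        flagged = sorted(
            o.timestamp_s for o in obs_list if o.p_child >= threshold
        )
        queue.append((video_id, top, flagged))

    # Highest-confidence videos are surfaced first.
    queue.sort(key=lambda item: item[1], reverse=True)
    return queue
```

Videos with no observation above the threshold stay in the queue with an empty timestamp list, which keeps lower-certainty material visible for human review instead of silently dropping it.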

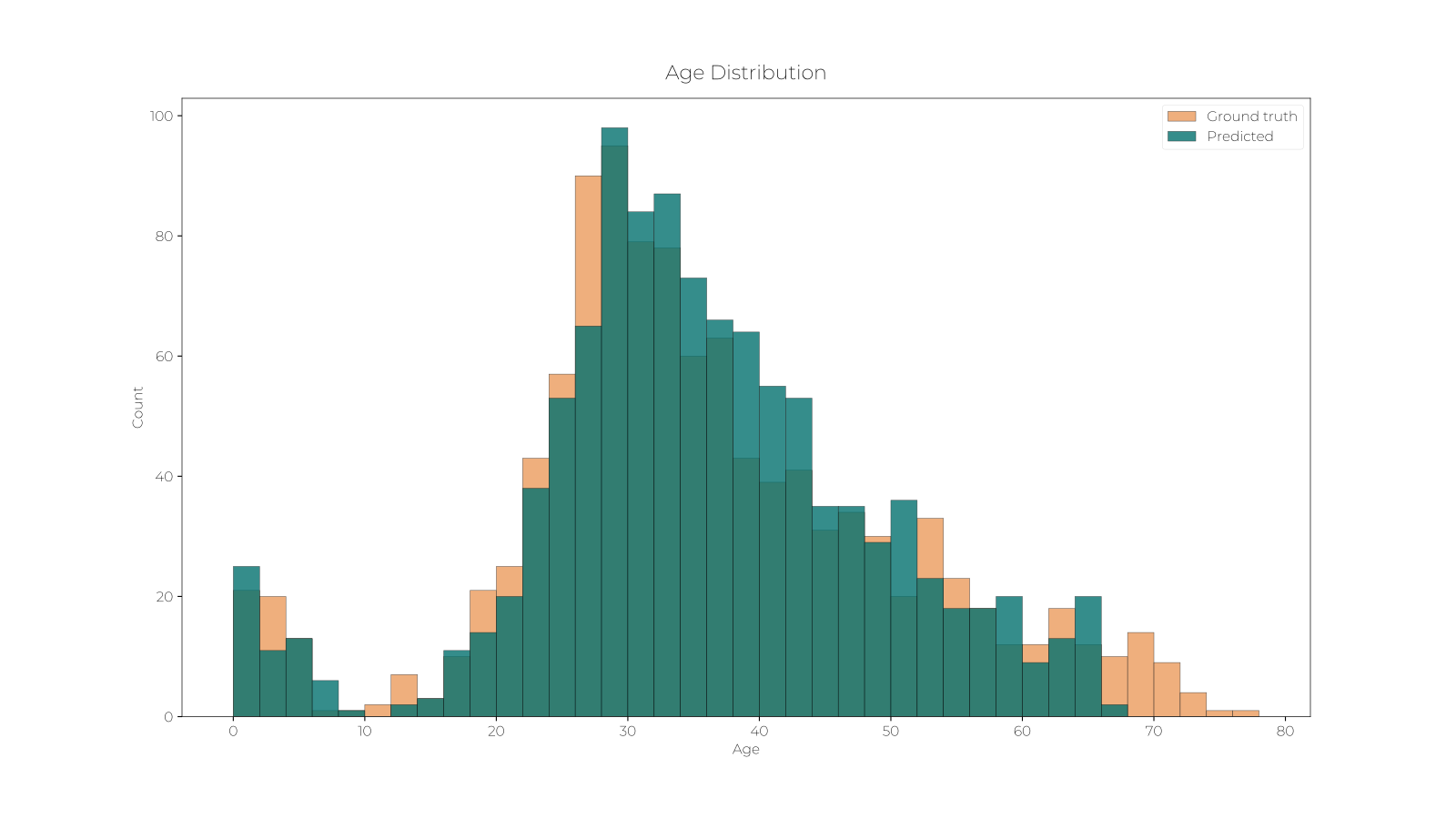

Comparison between a ground truth dataset (human raters of age) and the automated age estimates. The technology is almost perfectly matched, except for individuals in their later years.


The Problem is Layered, and So Is the Solution

This piece of technology is just one analytic that officers can apply to media to allow them to be more effective in fighting this scourge. Many more, such as automatically identifying the exact camera that captured the media, or attempting to automatically identify logos and hallmarks within imagery, add further layers of triage support that make all the difference in finding, and ultimately getting justice for, victims of these heinous crimes. As we are ready, and when the time is right, we will share more of what we are doing together to support this mission.